Join the crew.

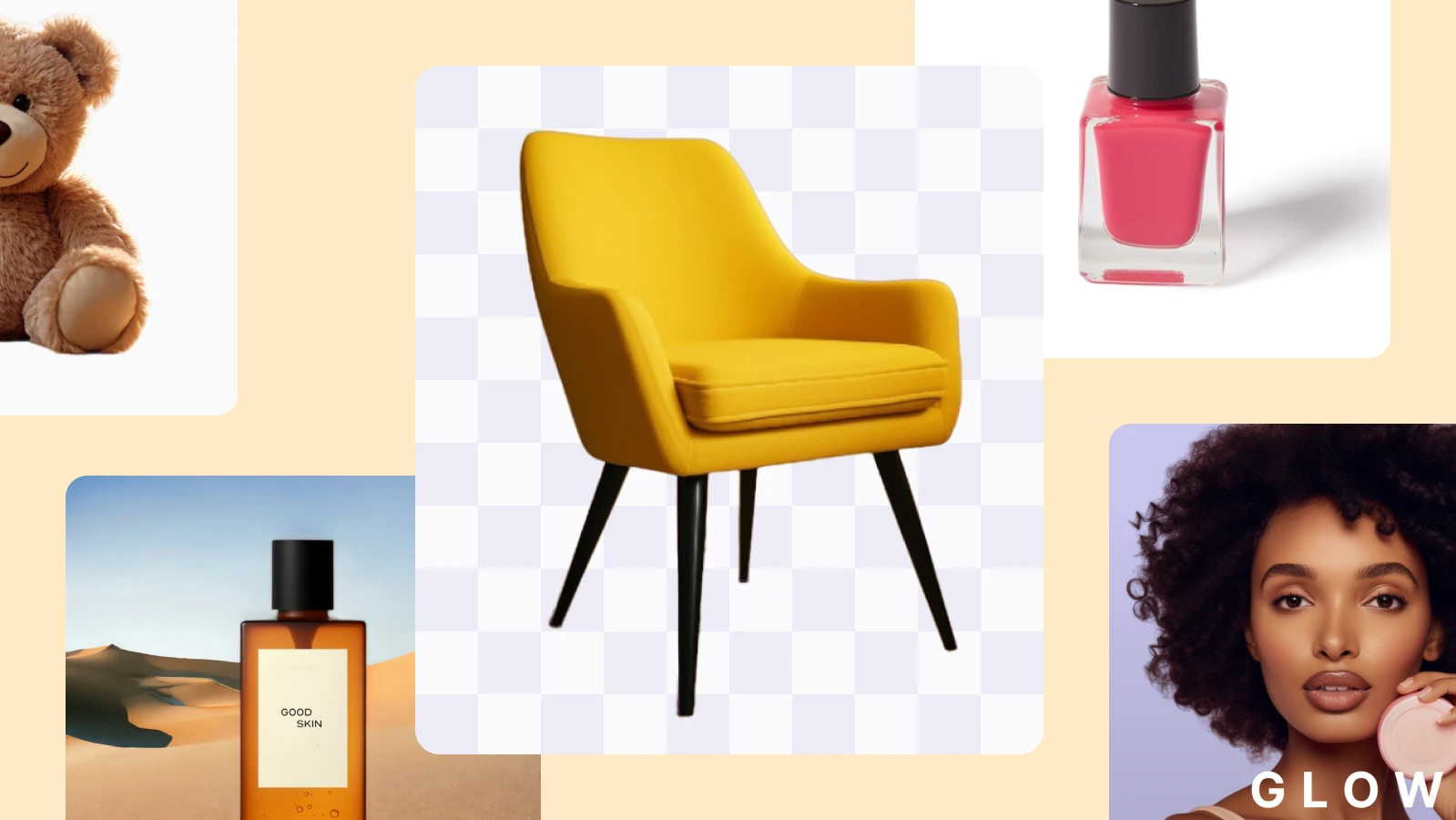

Photoroom provides photo editing software powerful enough to create outstanding images yet simple enough to be used without any training. We leverage deep learning to translate pixels into objects, drastically simplifying non-creative tasks such as removing backgrounds from images or removing objects.

Our mission: enable entrepreneurs and small businesses to compose images that stand out.

This is us 👇

We build photo editing apps

The Photoroom mobile application is used daily by millions of users to create stunning images for their businesses. With just a few taps, they can remove an image's background and add text and effects.

We take great pride in building our apps, which have been featured multiple times by both Apple and Google.

Try Photoroom:

Who we are

Small team, BIG impact

We value quality over quantity when it comes to hiring. Photoroom is composed of individuals who care about work-life blend and personal growth.

Remarkable growth

Created in 2020, Photoroom is profitable and used by millions every month. We have the foundations and ambition to build a healthy, fast-growing company.

Trusted by leaders

Our investors and advisors include experts in the field (Yann LeCun), world-class accelerators (Y Combinator), and venture capitalists (Nico Wittenborn).

Our values

Candid feedback

We share feedback with care to challenge the status quo and keep improving what we do and how we do it.

Ship fast to learn

Our focus on “done” rather than perfect helps us ship fast and learn faster.

Build it, own it

We are all trusted experts who take pride in our craft. We value follow-through and having an impact on our users, no matter what role we’re in.

Stay open

We keep an open mind to new ideas and we work in public, in a transparent manner.

They talk about us

Apple

Apple wrote about the history of Photoroom, from our Station F roots to translating the app early on.

TechCrunch

TechCrunch covered our Series B ($43M), our Series A ($19M), and our latest generative AI features launch.

Forbes

Forbes: Photoroom, An App That Generates AI Images In One Second, Is Now Worth $500 Million